Building the Matrix for Neurotech: Sam Hosovsky on the Future of Brain-Computer Interfaces

The Brain-Computer Interface (BCI) industry is in the spotlight, with major players like Neuralink, Synchron, Precision, Paradromics, and Motif emerging as frontrunners. Alongside these prominent companies, however, a wave of innovative hardware and software creators is pushing the boundaries of what the industry can achieve.

One such innovator is uCat, a company dedicated to developing a Matrix-style universe for the clinical and real-world training of BCIs. This groundbreaking approach aims to revolutionize how BCIs are developed and refined.

In an exclusive discussion, Sam Hosovsky, CEO and Founder of uCat, shares his journey into neurotech and offers insights into the cutting edge of BCI development.

Sam Hosovsky, CEO and Founder of uCat.

I would love to know about your journey into neurotech. Tell me about your background before uCat.

I suppose the journey started a long time ago. I've always been a computer scientist. Ever since I was a little kid, I spent more time coding than, I guess, talking to people. I was intrigued by the question of whether the brain is entirely computational, and even today that question remains at the forefront of my attention.

It wasn’t until around 2017, when I began exploring computational neuroscience and neuroscience in general, that I seriously tried to answer it. Up until then, I naively thought that maybe I could just build a brain from the ground up without studying the actual brain.

Then I came across an article from 2012, and just two words started my neurotech journey: Susumu Tonegawa. He’s a Nobel laureate, recognized for his work in immunology, who later became a neuroscientist. You might remember the headlines at the time: he implanted fake memories of fear into mice.

In the experiment, a mouse had a stressful reaction to an artificially induced scenario. The mouse’s feet were electrically shocked, which created a particular fear pattern in its brain. This pattern was recorded and later stimulated optogenetically to induce the same behavior again. But this time, the mouse was no longer being shocked—yet it still displayed fear due to that stimulation pattern.

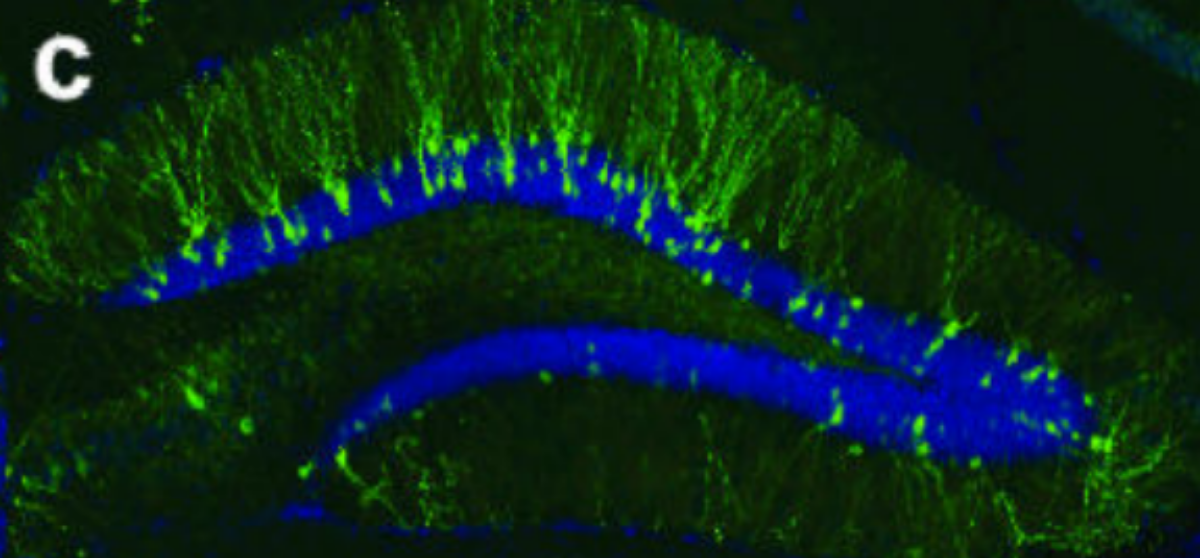

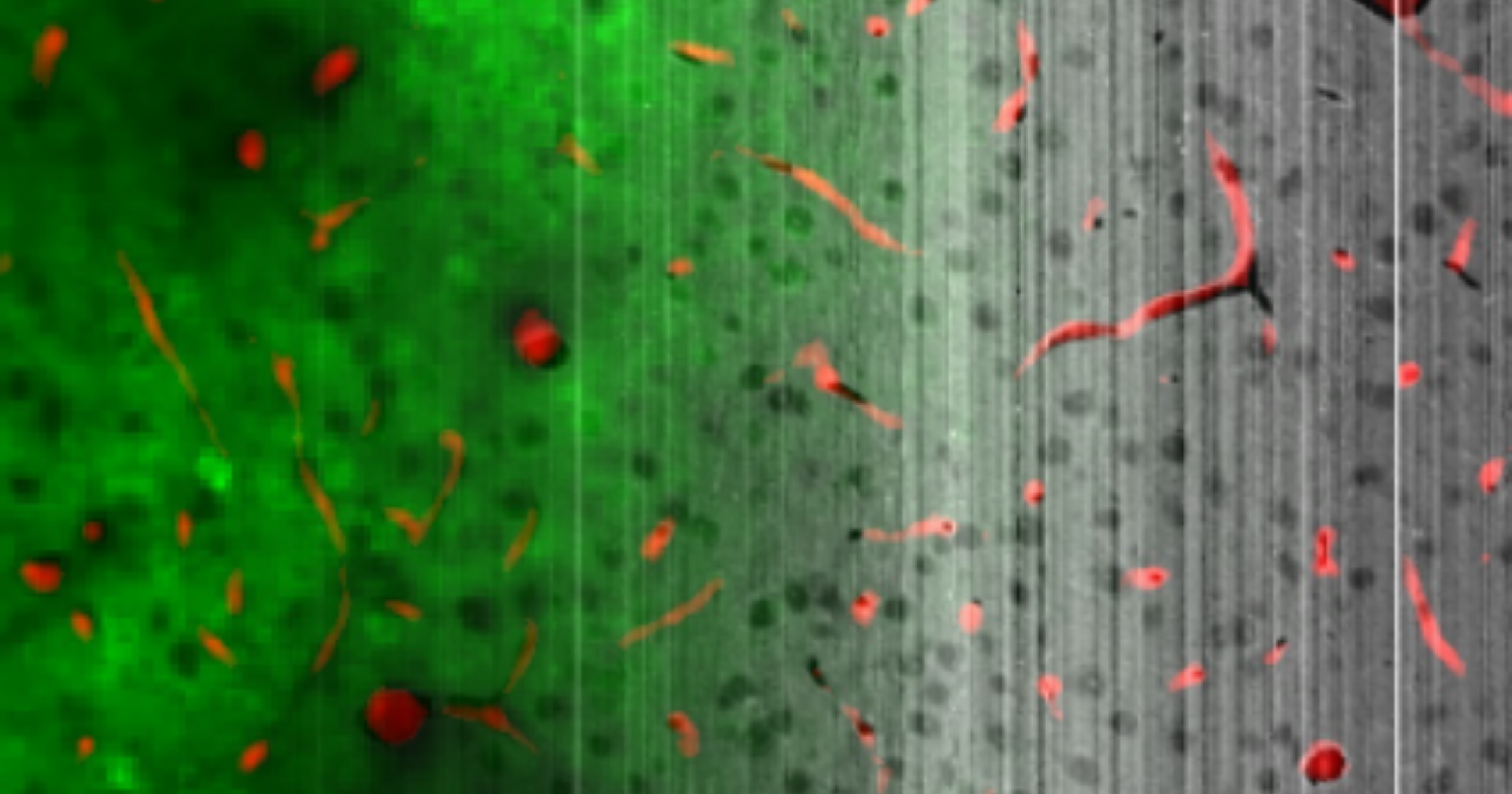

Visualization of a memory engram. Neurons active during a particular fear-conditioning task were labeled with channelrhodopsin-2 and later stimulated with light to induce a fake fear response. Reprinted from Figure 2, Liu et al., 2012.

And I thought, hey, this brain of ours is extremely malleable. If we can tamper with basic emotions like fear by manipulating memories, what else is possible? Ever since then, I’ve been on a journey, looking deeper into the brain. The question became: what is it that we can modify in our brains using brain-computer interfaces to begin with?

I remember, those articles changed everything. My studies made me think: how far can we take this?

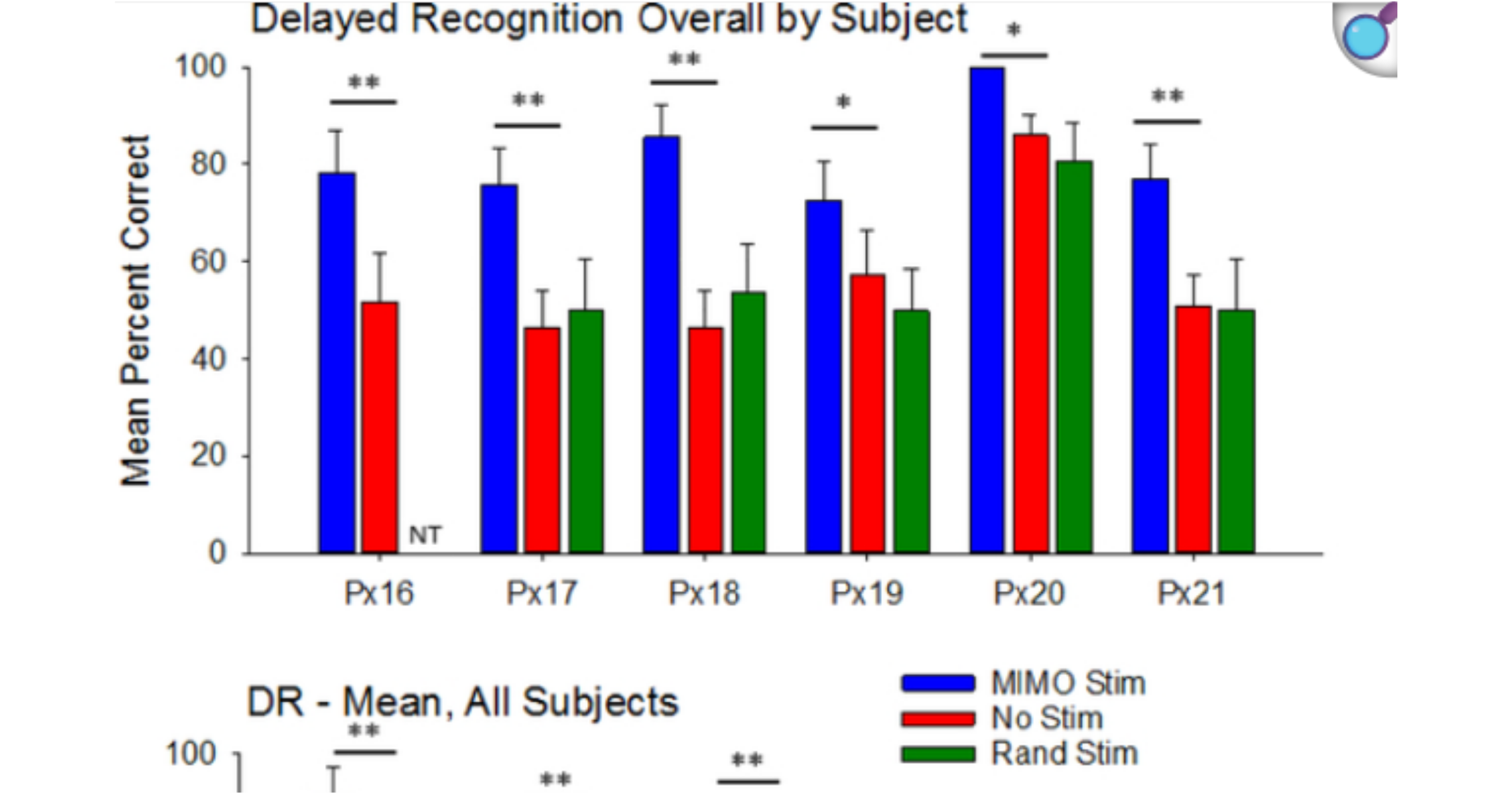

More fascinating papers followed, as researchers kept doubling down on this concept. But what pushed me to go all-in on neurotechnology was Dong Song’s work in Ted Berger’s lab, where they developed a human memory prosthesis. In their experiment, participants implanted with electrodes in two hippocampal regions, CA1 and CA3, were shown images of objects.

These regions encoded short-term memories of the objects through distinct patterns of brain activity. Dong Song trained a model to predict CA1 activity based on the upstream CA3 signals. Once trained, he showed participants new objects, but this time electrically stimulated their CA1 region based on the model’s predictions. Later, when asked to recall these objects, participants performed significantly better in memory tasks, over 30% better than those who didn’t have the system enabled and therefore didn’t receive the stimulation.

Remarkable results.

Reprinted from Figure 8, Hampson et al., 2019.
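Dong Song’s system learned to predict CA1 activity from upstream CA3 activity. The published work used a nonlinear multi-input, multi-output (MIMO) model; the sketch below swaps in plain linear least squares, solved through the normal equations, purely to illustrate the idea of predicting one region’s firing from another’s. The function names and the synthetic data are hypothetical.

```python
"""Simplified CA3 -> CA1 predictor (illustrative stand-in, not the published MIMO model).

Assumption: a linear least-squares map from binned CA3 activity to one
CA1 channel's activity, solved via the normal equations.
"""


def fit_linear_map(ca3_rows, ca1_values):
    """Fit weights w minimizing ||X w - y||^2 by solving (X^T X) w = X^T y."""
    n = len(ca3_rows[0])
    xtx = [[sum(r[i] * r[j] for r in ca3_rows) for j in range(n)] for i in range(n)]
    xty = [sum(r[i] * y for r, y in zip(ca3_rows, ca1_values)) for i in range(n)]
    aug = [row + [b] for row, b in zip(xtx, xty)]  # augmented matrix [X^T X | X^T y]
    for col in range(n):
        # Gauss-Jordan elimination with partial pivoting.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def predict_ca1(weights, ca3_row):
    """Predicted CA1 activity for one time bin of CA3 activity."""
    return sum(w * x for w, x in zip(weights, ca3_row))


# Synthetic example: three CA3 channels, CA1 generated from known weights.
true_w = [0.5, -0.2, 1.0]
ca3 = [[1, 0, 2], [0, 1, 1], [2, 1, 0], [1, 1, 1], [3, 0, 1]]
ca1 = [predict_ca1(true_w, row) for row in ca3]
w = fit_linear_map(ca3, ca1)
print([round(v, 6) for v in w])
```

In practice, a library routine such as NumPy’s `numpy.linalg.lstsq` would replace the hand-written solver, and real spike data would call for a nonlinear, regularized model.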

What and who inspired you to found uCat? I would love to learn more about the vision and mission.

I think the plan has evolved over the years. I was always trying to bridge what is technologically feasible and what is commercially viable. I want to work on memory prostheses, but these are very far from clinical markets, so the natural exercise was to look at what today’s high-bandwidth BCIs are. What are they trying to interface with? Which mental and cognitive processes do they interface with to begin with? They're coming to market as platform technology and will eventually scale to all kinds of other mental processes.

The first clinical indication they tackle is severe paralysis, because the motor and premotor cortices have been studied extensively and their somatotopic organization is relatively well understood. If you stimulate a particular part of the motor cortex, a specific movement of the body is executed; if you try to move a particular part of the body, you can see activity in the corresponding part of the motor cortex. This somatotopic mapping gave the first startups working on these BCIs everything they needed to start commercializing the existing knowledge about that part of the brain.

uCat came about after a conference where a preprint of a 2021 paper by David Moses from UCSF (Edward Chang’s lab) demonstrated that you can decode speech, at the level of whole words, from people with paralysis who can no longer speak. It involved a very small vocabulary of just 50 words, and from those 50 words the system could decode speech with relatively high accuracy. So when a participant tried to say “Apple,” the neuroprosthesis would decode that word.

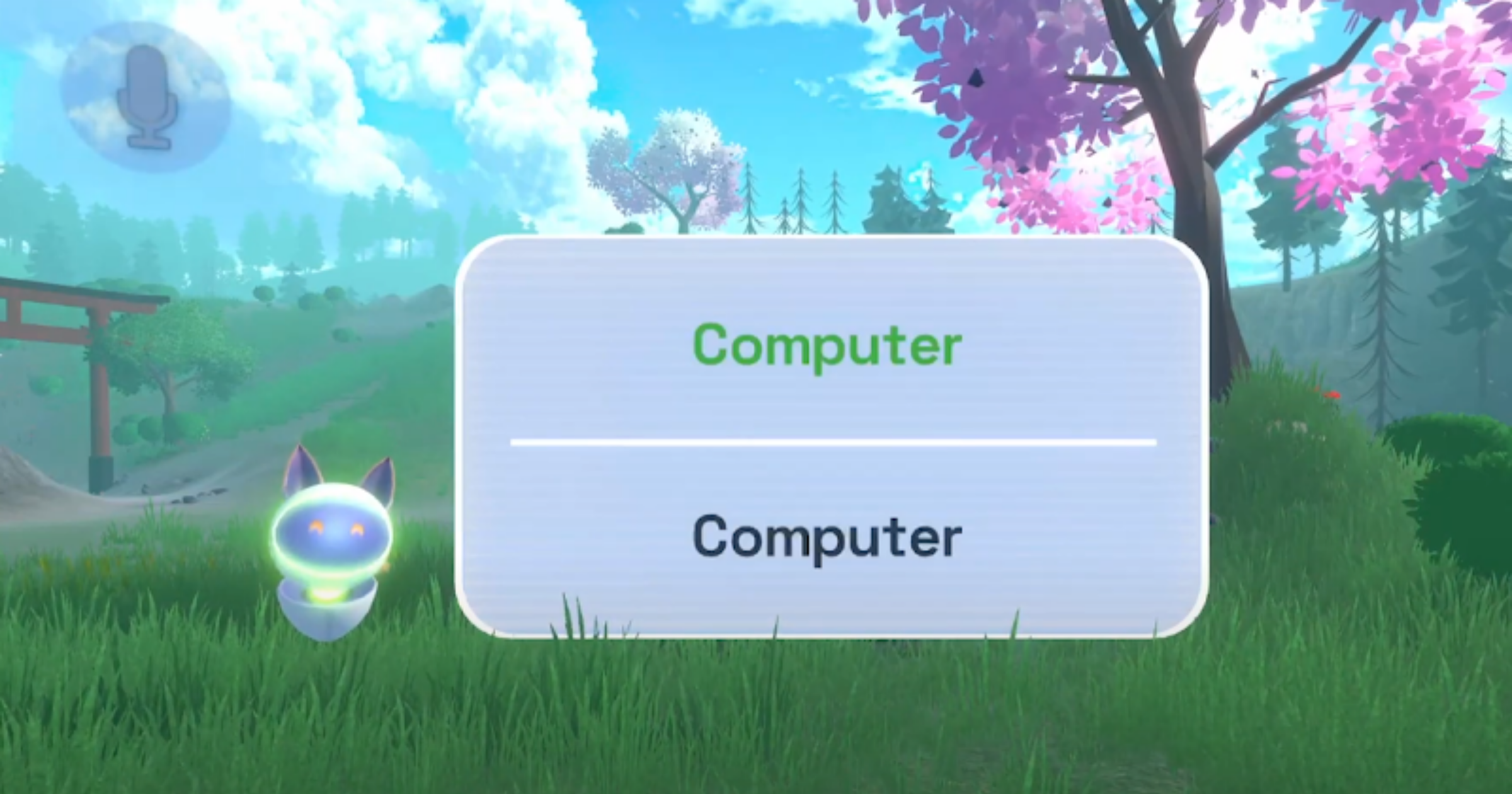
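Whole-word decoding over a small vocabulary is, at its core, a classification problem: each attempted word is mapped to one of a fixed set of labels. The sketch below uses a nearest-centroid rule over hypothetical neural feature vectors as a simple stand-in; the published system used a neural network classifier combined with a language model. The two-word vocabulary only keeps the example short; the same code runs unchanged on 50 words.

```python
"""Toy whole-word classifier over a fixed vocabulary (nearest-centroid stand-in)."""
import math


def train_centroids(examples):
    """examples: list of (word, feature_vector). Returns word -> mean feature vector."""
    sums, counts = {}, {}
    for word, vec in examples:
        if word not in sums:
            sums[word] = [0.0] * len(vec)
            counts[word] = 0
        sums[word] = [s + v for s, v in zip(sums[word], vec)]
        counts[word] += 1
    return {word: [s / counts[word] for s in sums[word]] for word in sums}


def decode_word(centroids, vec):
    """Return the vocabulary word whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda word: math.dist(centroids[word], vec))


# Hypothetical 2-D features recorded while a participant attempts each word.
training = [
    ("apple", [1.0, 0.1]), ("apple", [0.9, 0.0]),
    ("water", [0.0, 1.0]), ("water", [0.1, 0.9]),
]
centroids = train_centroids(training)
print(decode_word(centroids, [0.8, 0.2]))  # apple
```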

Eventually, I thought this could be one of the first clinical indications where people who are paralyzed and can no longer speak might now be able to speak full words, much faster than if they were typing them using assistive technologies. At uCat, we found a way to accelerate the development of these speech neuroprostheses by making it much easier for participants in clinical trials to get used to the system. Eventually, once these systems are clinically available, people with severe paralysis will be able to quickly train and use them to control their PC and type at natural rates.

This was the start of building a world around these systems.

An example of a uCat speech training task where the BCI user successfully repeats the prompted word “Computer” (top). Captured from uCat’s intro demo.

BCIs are gaining a lot of media attention, but it doesn’t sound like you’re looking to build the next BCI firm. What has uCat evolved into since those initial inspirations?

You could think of uCat as a layer-two kind of startup. The first layer is the device manufacturers, the OEMs: the BCI companies and others who are making the implants. The question we are asking is: how can we make sure that the implants they're bringing to market are beneficial to the end user?

Another problem that became apparent was that people are not only trying to decode speech; they're also trying to decode arm or hand movement to control a cursor on the screen. That is another value these systems are supposed to provide, but it isn't all that valuable compared to something like an eye tracker, which is completely non-invasive and costs around $1,000. I mean, now with iOS 18, I think every iOS device with a front-facing camera enables eye tracking for controlling its UI. So how much better is a device that costs $60,000 and requires open-brain surgery? Yes, you can click on things a bit faster, but is that really enough value to justify reimbursement? That was the other problem.

We don't just have speech neuroprostheses coming to the clinical market; we also have motor prostheses to control movement. These, however, serve similar patient populations, the biggest being stroke, high cervical spinal cord injury, and ALS (amyotrophic lateral sclerosis). Many people with these conditions have impairments in both speech and movement, so it only makes sense to create a speech-motor neuroprosthesis.

Now, how do we make it valuable? That was the main question for uCat. People who are paralyzed, nearly locked in, still internally process information the same way as everyone else. You think, but you cannot act. What you crave is to be as expressive as you once were, or as expressive as you would like to be, but your body doesn't allow it anymore.

So uCat evolved from a speech prosthesis user interface into a virtual reality avatar that you can command with these implanted BCIs. As you think of moving your legs, arms, and hands, or of making facial gestures, these movements are mapped onto your virtual reality avatar, and you get to move and express yourself the way you would like.

This was the key new development, or, I guess, pivot, from uCat’s original focus about two years ago. It's what we've been trying to offer BCI startups as they ramp up the clinical trials that will bring their products to market.

An implanted BCI user with severe paralysis, taught by uCat to embody their virtual avatar by thoughts alone, is indistinguishable from other metaverse users they meet.

So the avatar works as a proof of concept for BCIs, a way to showcase their capabilities before moving on to a physical prosthesis?

Ah, you're hitting on something even nicer that I didn’t mention. When I say neuroprosthesis, I just mean a piece of technology that replaces a particular function the brain has lost. It doesn’t have to control a prosthetic device such as a robot or a smart home, even though we traditionally think of prostheses as artificial arms. But you've hit the nail on the head: I think a VR avatar is definitely a stepping stone. Because if you can express yourself as an avatar, think about what you can do, right?

You can start by doing digitally the things you would be doing anyway. We're having a call right now, so if you want to increase the information bandwidth and make a more personal connection, you would want to enable video. You would want to see the person next to you, right? There is value in person-to-person communication through physical presence.

And that's where virtual reality avatars come in. You may want to attend classes or give lectures to a wider audience and feel as if you're there, and you can do all of that in VR. So, in some ways, the trend we're tapping into is also, I guess, the Metaverse economy. But you're absolutely right that it’s just a middle ground toward something larger, because this is still limited to VR.

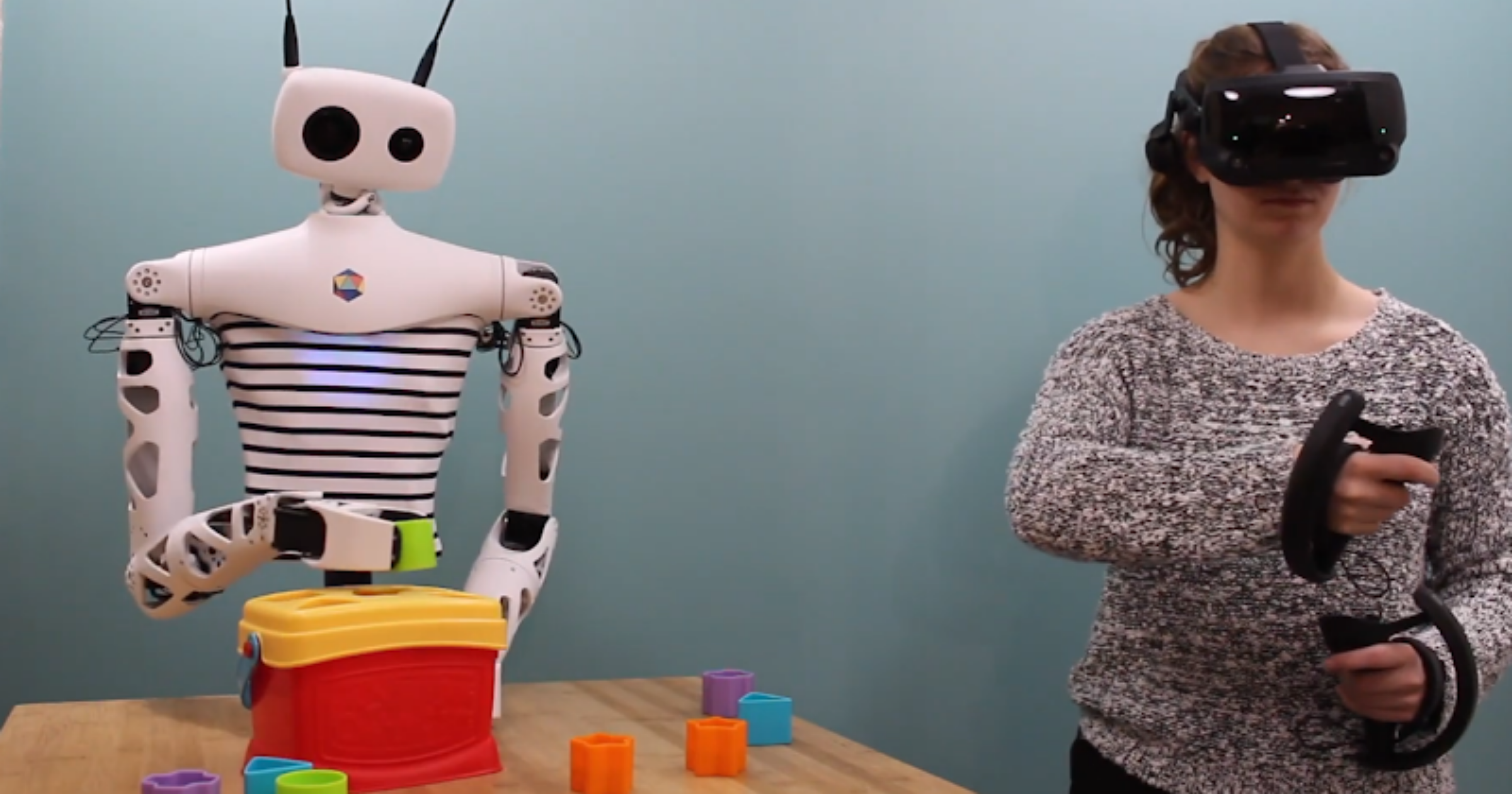

Yet the real value of BCIs comes when you no longer need to pay for a personal assistant or caretaker. People with severe paralysis spend around $150,000 a year, per person, on personal assistants who help them every day. You could reduce this cost by embodying a robotic avatar that coexists with you in the same physical space. If you can teleoperate this avatar through a VR headset, you could take care of yourself to a much larger extent, significantly reducing the cost.

This is definitely what a lot of this technology is converging on. It’s just that we’re not there yet. On the robotic side, why not start with simulated robots, a.k.a. virtual avatars, first?

Teleoperating the open-source Reachy robot from Pollen Robotics in VR to perform daily tasks in everyday environments.

That's amazing. So is your goal at the moment to partner with companies on proofs of concept and clinical trials, or to operate as a completely separate entity?

Yeah, precisely. We are trying to get in as early as we can. Our core technology is creating avatars that are interoperable, meaning that one avatar can exist across thousands of Metaverse applications without requiring the user to do anything more. This is quite a challenge because it's not a standardized space. We are also trying to do this in a way that is hardware-agnostic: it doesn't matter which BCI technology we attach ourselves to, and we want to start with as many as possible.

The value at this stage, and this was another small pivot I came to realize, lies in decoder development. Whether Neuralink wants its users to control a virtual reality avatar or a keyboard and mouse, the teams building decoders want to improve their performance. To do that, they need to instruct their participants as clearly as possible. This is a challenge, because it’s really hard to give instructions that are unambiguous and scalable to 200 participants.

Right now, we’re working with BCI companies to integrate virtual reality into their training pipeline. So a participant who is paralyzed but engaged in one of these trials puts on a VR headset and sees a virtual arm move in a specific, meaningful way, maybe a gesture from the elbow to the hand, or perhaps a hand gesture. They try to mimic that gesture exactly as they see it, as the phantom arm performs it.

This level of instructability is quite unique to VR, and it produces very clean datasets, very clean data samples, for those building the decoders. In this case, that’s Neuralink and other companies decoding motor intent. That’s how we try to stay relevant.
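VR cues yield clean data because every instructed gesture comes with a known label and onset time, so the neural recording can be cut into labeled windows for decoder training. A minimal sketch, assuming a hypothetical log format of (onset in seconds, gesture label) for cues and (timestamp, feature vector) for neural samples; the function name, gesture labels, and one-second window are illustrative choices, not any company’s pipeline.

```python
"""Cut neural recordings into labeled windows using VR cue times (illustrative)."""


def label_windows(cues, samples, window_s=1.0):
    """Return (label, [feature vectors]) for samples in [onset, onset + window_s).

    cues: list of (onset_seconds, gesture_label) logged by the VR task.
    samples: list of (timestamp_seconds, feature_vector) from the BCI.
    Cues with no samples inside their window are skipped.
    """
    dataset = []
    for onset, label in cues:
        window = [vec for t, vec in samples if onset <= t < onset + window_s]
        if window:
            dataset.append((label, window))
    return dataset


# Hypothetical session: two instructed gestures, features sampled at 10 Hz for 3 s.
cues = [(0.0, "elbow_flex"), (2.0, "hand_open")]
samples = [(i / 10, [float(i)]) for i in range(30)]
data = label_windows(cues, samples)
for label, window in data:
    print(label, len(window))
```

Because the label comes from what the participant was shown rather than from post hoc annotation, each window is tied to an unambiguous target; a real pipeline would also need to handle reaction-time delays and windows that overlap the next cue.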

Very interesting, I love that. There’s so much talk about BCIs at the moment; I saw an article recently about Neuralink trying to raise $500 million at an $8.5 billion valuation. It's obviously the hottest topic in neurotech right now. How are you being received in that community, given that your work is, in a way, on the fringes?

I think it depends on what the goals are. If the community strives for particular incentives and we're helping them achieve them, it works really well.

So this is our position. Half of our partners are foundations that represent patients, and half are people who are building or researching BCIs. In this way, we are a sort of guide for organizations on how to design products that deliver the value they need to deliver in order to commercialize those products in the first place. I think we are very much in the thick of everything, precisely because we are hardware-agnostic. So it is important for my company to understand, to prognosticate, which hardware is going to be able to deliver the data we need for our integration, and then partner with that provider.

So yes, you're right that BCI is a pretty hot topic nowadays. There are all kinds of modalities for interfacing with the brain, even if we only count invasive, implanted BCIs. Which of those are going to survive the first five years, and which are even going to get a reimbursement code from CMS? That's an open question.

What does the future hold for yourself and uCat?

Well, the first version of the product will really resemble something like what we've come to know from science fiction, as in The Matrix. This is the milestone we're working toward. As you imagine moving your entire body, your entire virtual body will move, and people with severe paralysis will benefit from it. So perhaps we can say that the first milestone to reach is ensuring that people with severe paralysis in social virtual reality applications are indistinguishable from able-bodied users. That's what we're striving for, and we have a number of research programs aimed at bringing us closer to this vision.

The space, in terms of decoding biomechanics from the motor cortical areas, is a bit fragmented. There is a lot of academic literature pointing to what we can already do, even with noisy samples and a limited amount of data. BCI companies have only recently begun heavily investing in machine learning to help extract the biomechanical data we can integrate with avatars.

In terms of timing, that's the key question. We are a layer-two company, and we’re monitoring how quickly companies are building BCIs and how fast decoder development is progressing. It looks like, in the next five or six months, we should be able to get comprehensive data, at least for upper-extremity movement, from companies like Precision. By the way, Precision just received FDA 510(k) clearance last week to use their implants extra-operatively for 30 days for epilepsy monitoring.

So, these datasets are on the way. People will begin using them to create better decoders of user intent, how a person is trying to move. Once we reach the point where we have enough data, sufficient accuracy in the models, and diversity in complex behaviors, we’ll be able to apply that on top of avatars and, hopefully, reach a clinical audience as soon as the technology becomes available.

This is why we are working with BCI companies now, rather than later, so that by the time the BCI product is released, our interoperable avatar can launch alongside it.

That’s a nice little segue into the last question. With BCIs dominating the headlines and wearable neurotech becoming more accessible to consumers as well as patients, where do you see the future of neurotech?

The direct-to-consumer market is an interesting one. The real reason uCat exists is that I thought projects like uCat could quickly accelerate our path to high-performance, highly scalable, high-resolution BCIs. And, to my very first point in this interview, I am somebody who wants to understand whether the hypothesis of a purely computational brain is valid. So this is something I'm supporting directly.

But even in the invasive space, there are different modalities we can tap into. For the entirety of the last century, and the century before, we have pretty much relied on bioelectronic interfaces. Now we see new modalities coming to light, like ultrasound. These are particularly promising because they can be a lot less invasive while delivering datasets of similar resolution that help us understand what goes on.

Now, on the non-invasive, direct-to-consumer side, this was another path. I thought, hey, maybe if the public understands or gets used to the concept of BCIs, this can accelerate the overall neurotechnology market. I stepped away from that because, for a long time, we didn't have any compelling modalities beyond EEG. There was just nothing that I, as an angel investor for instance, was interested in supporting. Since then, new modalities for interfacing with the brain have entered commercial markets, like temporal interference, where alternating currents at slightly different frequencies interfere to create a low-frequency envelope in deep regions of the brain, allowing for transcranial deep brain stimulation.
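Temporal interference rests on a standard trigonometric identity. As a simplified sketch, assume two sinusoidal currents of equal amplitude $A$ and the same field orientation at the point of interest, with frequencies $f_1$ and $f_2 = f_1 + \Delta f$:

$$A\sin(2\pi f_1 t) + A\sin(2\pi f_2 t) = 2A\cos(\pi\,\Delta f\,t)\,\sin\!\left(2\pi\left(f_1 + \tfrac{\Delta f}{2}\right)t\right)$$

The right-hand side is a fast carrier at $f_1 + \Delta f/2$ whose amplitude $|2A\cos(\pi\,\Delta f\,t)|$ rises and falls $\Delta f$ times per second. With illustrative values such as $f_1 = 2\,\text{kHz}$ and $\Delta f = 10\,\text{Hz}$, the working premise is that neurons follow the 10 Hz envelope rather than the kilohertz carrier. In real tissue the two fields differ in amplitude and direction from place to place, so the envelope’s modulation depth varies with location; arranging the electrodes so the fields are balanced in a deep target is what steers the effect there.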

There is also phase-locked stimulation of the brain. When you use EEG to record the brain, you can follow the dynamic evolution of its activity and then deliver stimulation, whether visual, auditory (e.g., playing a sound), or of another type, that adapts to the activity your brain is currently producing. This came out of Ed Boyden’s lab. So there are these direct-to-consumer plays. There are also non-invasive focused ultrasound devices that can stimulate different parts of the body, even the peripheral nervous system, like the vagus nerve, and can elicit all kinds of interesting states. So I'm a bit more bullish now on the non-invasive, direct-to-consumer market. I think we're going to see some really remarkable use cases in broader network disorders, mainly in mental health, which aren’t localized to the activity of specific circuits.
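The closed-loop idea of timing a stimulus to the brain’s ongoing rhythm can be sketched offline. As a simplified illustration, assume the EEG has already been band-pass filtered around a single rhythm of known frequency; the code finds upward zero crossings (phase 0 in a sine convention) and schedules each stimulus a fixed fraction of a cycle later to hit a target phase. Real systems must estimate phase causally and in real time, and the function names here are hypothetical.

```python
"""Offline sketch of phase-locked stimulus timing (simplified, not a real-time method)."""
import math


def upward_zero_crossings(signal, fs):
    """Times (s) where the signal crosses zero going upward, linearly interpolated."""
    times = []
    for i in range(1, len(signal)):
        prev, cur = signal[i - 1], signal[i]
        if prev < 0 <= cur:
            frac = -prev / (cur - prev)  # fraction of a sample past index i - 1
            times.append((i - 1 + frac) / fs)
    return times


def stimulus_times(signal, fs, f_hz, target_phase_deg):
    """One stimulus per cycle at the target phase (0 deg = upward crossing of a sine)."""
    delay = (target_phase_deg % 360) / 360.0 / f_hz
    return [t + delay for t in upward_zero_crossings(signal, fs)]


# Synthetic "filtered EEG": 3 s of a clean 1 Hz rhythm sampled at 100 Hz.
fs, f = 100, 1.0
eeg = [math.sin(2 * math.pi * f * n / fs) for n in range(300)]
result = stimulus_times(eeg, fs, f, 90)  # target the peak of each cycle
print(result)
```

Targeting 90 degrees places each stimulus a quarter cycle after the upward crossing, which for a sine is the peak.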

But what excites me, the stuff that I'm really following as much as I can, is connectomics. A connectome is a synaptic-level reconstruction of the brain, essentially a structural scan at a resolution of 5 to 10 nanometers, and we are producing bigger and bigger datasets every day. We’ve had a connectome mapping the entire fly brain for quite some time. We have a cubic millimeter of the mouse brain and a cubic millimeter of the human brain. We even have a cubic millimeter of mouse brain that was reconstructed after first recording the activity of about 100,000 of its neurons.

An illustration of a co-registered functional (calcium imaging) and structural (electron microscopy) dataset of a 1 mm³ segment of mouse visual cortex from the MICrONS consortium.
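To give a sense of scale for the 5 to 10 nanometer resolution mentioned above, here is a back-of-the-envelope voxel count for one cubic millimeter, assuming isotropic voxels (real electron-microscopy volumes are usually sectioned more thickly along one axis, which lowers the count):

$$N_{10\,\text{nm}} = \left(\frac{1\,\text{mm}}{10\,\text{nm}}\right)^3 = \left(\frac{10^{6}\,\text{nm}}{10\,\text{nm}}\right)^3 = 10^{15}, \qquad N_{5\,\text{nm}} = \left(\frac{10^{6}\,\text{nm}}{5\,\text{nm}}\right)^3 = 8 \times 10^{15}$$

At an assumed one byte per voxel, that is roughly 1 to 8 petabytes of raw imagery for a single cubic millimeter, before any segmentation or annotation.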

These kinds of datasets are remarkably powerful in terms of what they can tell us about the process of cognition. I think they can translate into lessons about ourselves and about which parts of the cortex these high-bandwidth invasive BCIs should be placed in. And I think we are going to invent more ingenious ways of scaling these connectome datasets up. For instance, again, there is Ed Boyden’s work on expansion microscopy: using just confocal microscopes, you can now image brain tissue at near 15-nanometer resolution by physically expanding the tissue before you image it.
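The expansion trick can be summarized with one approximate relation: if tissue is physically enlarged by a linear factor $E$ and then imaged with optical resolution $d_{\text{optical}}$, features are resolved at roughly $d_{\text{optical}}/E$ in the original tissue. The numbers below are assumptions chosen for illustration, a diffraction-limited confocal resolution of about 300 nm and a twentyfold linear expansion:

$$d_{\text{eff}} \approx \frac{d_{\text{optical}}}{E} = \frac{300\,\text{nm}}{20} = 15\,\text{nm}$$

In practice, the achievable resolution also depends on how uniformly the tissue expands and on the size of the fluorescent labels, so this figure is a best case set by the optics alone.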

These amazing approaches are going to give us vast datasets of both the structural and functional connectivity of the brain. We're going to learn so much about it, and then, hopefully, translate it into valid indications within the neurotechnology market. That's what I'm looking forward to.

Looking to Build a World-Class Neurotech Team?

Carter Sciences delivers personalized talent strategies backed by 20 years of international headhunting experience. We support growth-stage startups with tailored solutions and cost-effective fee structures, so you can scale without impacting your runway.

Specialisms include: Neurotechnology, Neuromodulation/Stimulation, Brain-Computer Interfaces, Wearable Devices, Neurosurgical Technology, and Private Equity/Venture Capital.
